Implementing A/B Tests in Python

A quick guide to experiment design and implementation

A/B testing is one of the most important tools for optimizing most things we interact with on our computers, phones and tablets. From website layouts to social media ads and product features, every button, banner and call to action has probably been A/B tested. And these tests can be extremely granular; Google famously tested “41 shades of blue” to decide what shade of blue should be used for links on the Google and Gmail landing pages.

I recently completed the Udacity course “A/B Testing by Google — Online Experiment Design and Analysis” and wanted to share some of my key takeaways as well as how you can implement A/B testing using Python.

What is A/B Testing?

A/B testing is a general methodology used online when testing product changes and new features. You take two sets of users, show one set of users the changed product (experiment group) and show the second set of users the original product or set of features (control group). You then compare the two groups to determine which version of the product is better.

A/B Testing is important because it:

- Removes the need for guessing or relying on intuition

- Provides accurate answers quickly

- Allows for rapid iteration on ideas

- Establishes causal relationships — not just correlations

A/B testing works best when testing incremental changes, such as UX changes, new features, ranking and page load times. Generally, there need to be enough users to support statistical inference, and the experiment must be able to finish within a reasonable amount of time.

A/B testing doesn’t work well when testing major changes, like new products, new branding or completely new user experiences. In these cases there may be novelty effects that drive higher than normal engagement, or emotional responses that cause users to resist a change initially. The re-branding of Instagram, which initially caused a major negative emotional response, is a great example of a bad use case for A/B testing. Additionally, A/B tests on products that are purchased infrequently and have long lead times are a bad idea: waiting to measure the repurchase rate of customers buying houses is going to blow out experiment timelines.

Designing an A/B Test

1. Research

Before diving into any experiment, do some research. Research can help you design an experiment, ensure you use best practices and may even unearth results of similar experiments. There are several useful data sources that may help design or support an experiment:

- External Data and Research: the adage “if you’ve thought of it, someone’s probably tried it” is true for experiments; doing some research may reveal the insights you need or at least provide ideas for experiment design

- Historical Data: retrospective analysis is cheap and easy and can provide insight into historical correlations (but not causation!)

- User Experience Research: actually getting to see how a user interacts with a product can be invaluable for setting up and testing an experiment

- Focus Groups: while not accessible for most analysts, focus groups can be great for testing major changes and new products

- Surveys: surveys can be effective for gathering information that is not revealed online

2. Choose Metrics

Metric choice is, unsurprisingly, fundamental to successful A/B testing. Many common experiments have clear, best-practice metrics; however, every company faces its own nuances and priorities that should be considered when choosing metrics. Developing the metrics should leverage experience, domain knowledge and exploratory data analysis. In my experience, metrics work best when cascaded top-down from a company or business unit strategy. This way there is a clear link between the goals of the experiment and the goals of the company. This approach means experiments can anchor to one or two clear metrics (e.g. customer conversions or number of active users) that are simple to report and understandable to all stakeholders.

Having one or two key metrics is usually not enough though. Most decision makers will want to understand what has driven change, and so key metrics should be supported by more detailed metrics that help explain results. One framework that can provide this explainability is tracking metrics across the user journey or customer funnel. Having metrics across these frameworks helps clearly identify where changes have occurred and where additional experimentation and attention could be focused.

Four categories of metrics should be considered when designing an experiment, namely:

- Sums and counts — e.g. how many cookies visit a webpage

- Distributional metrics such as mean, median and quartiles — e.g. what is the mean page load time

- Probability and rates — e.g. clickthrough rates

- Ratios — e.g. ratio of the probability of a revenue generating click to the probability of any click
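To make the four categories concrete, here is a minimal pandas sketch that computes one metric of each kind from a tiny, made-up event log. The column names (`cookie_id`, `page_load_ms`, `clicked`, `revenue_click`) and the data are hypothetical and only for illustration.

```python
import pandas as pd

# Hypothetical event log: one row per page view (illustrative data only)
events = pd.DataFrame({
    "cookie_id":     ["a", "a", "b", "c", "c", "d"],
    "page_load_ms":  [320, 410, 290, 505, 380, 450],
    "clicked":       [1, 0, 1, 1, 0, 0],
    "revenue_click": [1, 0, 0, 1, 0, 0],
})

# 1. Sums and counts: how many distinct cookies visited the page
unique_cookies = events["cookie_id"].nunique()

# 2. Distributional metrics: mean, median and quartiles of page load time
load_mean = events["page_load_ms"].mean()
load_median = events["page_load_ms"].median()
load_quartiles = events["page_load_ms"].quantile([0.25, 0.5, 0.75])

# 3. Probabilities and rates: clickthrough rate (clicks per page view)
ctr = events["clicked"].mean()

# 4. Ratios: P(revenue-generating click) / P(any click)
revenue_click_ratio = events["revenue_click"].mean() / events["clicked"].mean()

print(unique_cookies, load_mean, load_median, ctr, round(revenue_click_ratio, 3))
```

Note that the same raw log supports all four categories; what changes is the aggregation, which is why the next step of precisely defining each metric matters.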

3. Define Metrics

Once you have decided on a set of metrics, you then need to specifically define the metrics. This step seems simple on the surface until you begin to dig into your metrics. For example, the number of active users is a core metric used by most technology companies — but what does this actually mean? When defining this metric some considerations would include:

- What is the time period for a user to be active? Is someone active who used the product today, in the last 7 days, in the last month?

- What activity constitutes active? Is it logging in, spending a certain amount of time using the product? What if the activity is only an automatic notification?

- Does how active the user is matter, e.g. one user spends 10 seconds per day vs another user who spends 5 hours per day?

- Are we measuring paying and non-paying users differently?

Many metrics have industry-defined standards that can be leveraged for experiments, but these questions need to be fleshed out upfront so that everyone is clear on exactly what is being measured and how to implement the experiment.
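One way to force these questions to be answered is to write the metric definition as code, with each decision as an explicit parameter. The sketch below is hypothetical: the log format, the event names and the defaults (a 7-day window where only logins and sessions count, so automatic notifications do not) are assumptions for illustration, not a standard definition.

```python
from datetime import date, timedelta

# Hypothetical activity log: (user_id, day, event_type)
activity = [
    ("u1", date(2024, 1, 10), "login"),
    ("u2", date(2024, 1, 9), "push_notification"),  # automatic, not user-initiated
    ("u3", date(2024, 1, 2), "login"),              # falls outside a 7-day window
    ("u4", date(2024, 1, 8), "session"),
    ("u1", date(2024, 1, 7), "session"),            # same user counted once
]


def count_active_users(log, as_of, window_days=7, qualifying=("login", "session")):
    """Count distinct users with a qualifying event in the window ending on as_of.

    window_days answers "what time period counts as active?" and qualifying
    answers "what activity constitutes active?".
    """
    start = as_of - timedelta(days=window_days - 1)
    return len({user for user, day, event in log
                if start <= day <= as_of and event in qualifying})


print(count_active_users(activity, date(2024, 1, 10)))
```

Changing a single parameter, such as widening the window to 30 days or counting push notifications, changes the result, which is exactly why the definition needs to be agreed before the experiment starts.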

4. Determine Sample Size Required

Next we want to figure out how many data points will be required to run an experiment. There are four test parameters that need to be set to enable the calculation of a suitable sample size:

- Baseline rate — an estimate of the metric being analyzed before making any changes

- Practical significance level — the minimum change to the baseline rate that is useful to the business, for example an increase in the conversion rate of 0.001% may not be worth the effort required to make the change whereas a 2% change will be

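- Confidence level — one minus the significance level (α), where α is the probability that the null hypothesis (experiment and control are the same) is rejected when it shouldn’t be, i.e. a false positive

- Sensitivity — also called statistical power, the probability that the null hypothesis is rejected when it should be, i.e. when a real change at least as large as the practical significance level exists; one minus the sensitivity is the probability of a false negative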

The baseline rate can be estimated from historical data, and the practical significance level depends on what makes sense to the business. The confidence level and sensitivity are generally set at 95% and 80% respectively, but can be adjusted to suit different experiments or business needs.

Once these are set, the sample size required can be calculated statistically using a calculator such as this.
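If you would rather compute this in code, the sketch below uses one common normal-approximation formula for comparing two proportions, treating the practical significance level as an absolute difference in rates. The function name and defaults are my own, and online calculators may use slightly different formulas, so results can differ a little.

```python
from math import ceil, sqrt
from statistics import NormalDist


def min_sample_size_per_group(baseline, d_min, alpha=0.05, power=0.80):
    """Minimum users per group to detect an absolute change of d_min.

    baseline : estimated conversion rate before the change (e.g. 0.10)
    d_min    : practical significance level as an absolute difference (e.g. 0.02)
    alpha    : significance level (1 - confidence level), two-sided
    power    : sensitivity, the probability of detecting a true change of d_min
    """
    p1, p2 = baseline, baseline + d_min
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    # Standard deviations under H0 (both groups at baseline) and under H1
    sd_null = sqrt(2 * p1 * (1 - p1))
    sd_alt = sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    n = ((z_alpha * sd_null + z_beta * sd_alt) / d_min) ** 2
    return ceil(n)  # round up: you cannot recruit a fraction of a user


print(min_sample_size_per_group(baseline=0.10, d_min=0.02))
```

Halving the practical significance level roughly quadruples the required sample, so it is worth being realistic about the smallest change that matters.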

5. Finalize Experiment Design

Armed with well-defined metrics and sample size requirements, the final step in experiment design is to consider the practical elements of conducting an experiment. In this step you will need to decide:

- When the experiment should run (e.g. every day or only business days) and how long the experiment should run (e.g. 1 day or 1 week)

- How many users to expose to the experiment per day, where exposing fewer users means longer experiment time periods

- How to account for the learning effect, i.e. allowing users time to learn the changes before measuring their impact

- What tools will be used to capture data, such as Google Analytics
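The trade-off between daily exposure and experiment length is simple arithmetic. Here is a hypothetical helper, assuming traffic is constant from day to day and the exposed users are split evenly across the groups:

```python
from math import ceil


def experiment_days(n_per_group, daily_users, exposure_fraction, n_groups=2):
    """Days needed to reach the required sample size.

    n_per_group       : minimum sample size per group
    daily_users       : average users per day (assumed constant)
    exposure_fraction : share of daily users diverted into the experiment
    """
    users_needed = n_per_group * n_groups
    users_per_day = daily_users * exposure_fraction
    return ceil(users_needed / users_per_day)


# Exposing 50% vs 10% of 10,000 daily users to an experiment needing 3,623 per group
print(experiment_days(3623, 10_000, 0.5), experiment_days(3623, 10_000, 0.1))
```

In practice you might round the result up to whole weeks so that weekday and weekend behaviour are both represented.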

Before running any experiments, you should also conduct some tests to make sure there are no bugs that could skew experiment results, such as issues with certain browsers, devices or operating systems. It is also very important to ensure users are assigned randomly to the control and experiment groups.
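One common way to get random but repeatable assignment is to hash a user ID together with an experiment name. This is a sketch of that general technique, not the method of any particular tool; the function name and the 50/50 default are assumptions.

```python
import hashlib


def assign_group(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'treatment'.

    Hashing the experiment name with the user ID gives assignments that look
    random across users, stay fixed for a returning user, and are effectively
    unrelated between experiments with different names.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # map the first 32 bits to [0, 1)
    return "treatment" if bucket < treatment_share else "control"


print(assign_group("user-42", "landing_page_v2"))
```

The determinism matters: a user who returns tomorrow should see the same landing page, otherwise their behaviour is a mix of both versions.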

Implementing an A/B Test using Python

The real difficulty in conducting A/B tests lies in designing the experiment and gathering the data. Once these steps are done, the analysis itself follows standard statistical significance tests. To demonstrate how to conduct an A/B test, I’ve downloaded a public dataset from Kaggle, available here, which tests the conversion rates of two groups (control and treatment) exposed to different landing pages. The dataset comprises twenty-nine thousand rows of data points, and the Python code outlined below is available on my GitHub here.

For this example, I’ll test the null hypothesis that the probability of conversion in the treatment group minus the probability of conversion in the control group equals zero, against the alternative hypothesis that this difference does not equal zero.
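Written out as a two-sided test, with p_T and p_C denoting the conversion probabilities of the treatment and control groups (notation introduced here for convenience):

```latex
H_0:\; p_T - p_C = 0 \qquad \text{vs.} \qquad H_1:\; p_T - p_C \neq 0
```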

1. Clean Data

As with any analysis, the first step is to clean up the data that has been collected. This step is important to ensure that the data aligns with the metric definitions determined during the experiment design.

You should start by running some sanity checks to make sure the experiment data reflect the initial design, e.g. whether roughly equal numbers of users saw the old and new landing pages, and whether the conversion rates are plausible.
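The "roughly equal numbers" check can be made more precise with a test often called a sample ratio mismatch check. Here is a minimal sketch using a normal approximation to the binomial; the function name and example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def sample_ratio_pvalue(n_control, n_treatment, expected_share=0.5):
    """Two-sided p-value that the observed split matches the designed split.

    Uses a normal approximation to the binomial. A very small p-value
    suggests a problem with how users were assigned or logged.
    """
    n = n_control + n_treatment
    expected = n * expected_share
    sd = sqrt(n * expected_share * (1 - expected_share))
    z = (n_control - expected) / sd
    return 2 * (1 - NormalDist().cdf(abs(z)))


# A 5,030 / 4,970 split is unremarkable; 5,300 / 4,700 is not
print(sample_ratio_pvalue(5030, 4970), sample_ratio_pvalue(5300, 4700))
```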

I found one issue in this dataset: some users in both groups were exposed to the wrong landing page. The control group should have been exposed to the old landing page, while the treatment group should have been exposed to the new page. I dropped the almost 4,000 rows where this issue was present.
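Here is a minimal pandas sketch of that cleaning step. The column names (`user_id`, `group`, `landing_page`, `converted`) and their values are assumptions about the dataset's layout, and the rows are made up for illustration.

```python
import pandas as pd

# Hypothetical rows; the real dataset's column names and values may differ
df = pd.DataFrame({
    "user_id":      [1, 2, 3, 4, 5, 6],
    "group":        ["control", "control", "treatment", "treatment", "control", "treatment"],
    "landing_page": ["old_page", "new_page", "new_page", "old_page", "old_page", "new_page"],
    "converted":    [0, 1, 0, 1, 1, 0],
})

# Each group should only ever have seen its own landing page
expected_page = {"control": "old_page", "treatment": "new_page"}
aligned = df["landing_page"] == df["group"].map(expected_page)

print(f"Dropping {(~aligned).sum()} mismatched rows")
clean = df[aligned].copy()
```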

2. Input Test Parameters and Check Sample Size is Large Enough

As mentioned in “Designing an A/B Test, Section 4 — Determine Sample Size Required”, there are four test parameters to set: the baseline rate, practical significance level, confidence level and sensitivity. I used the control group’s conversion probability as a proxy for the baseline rate and set the practical significance level, confidence level and sensitivity to 1%, 95% and 80% respectively. Using these values, I calculated the minimum sample size required for each test group to make sure there was sufficient data to draw statistically robust conclusions.

The minimum sample size of 17,209 is slightly less than the 17,489 users in the control group and 17,264 users in the treatment group and is therefore sufficient to conduct the hypothesis testing.

3. Run A/B Test

Finally, we can run the A/B test, or more specifically, test whether we can reject the null hypothesis (that the two groups have the same conversion rate) with 95% confidence. To do this, we calculate a pooled probability and pooled standard error, a margin of error, and the upper and lower bounds of the confidence interval. If these terms are unfamiliar, it’s worth working through the modules in the Udacity course about A/B testing.
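A minimal sketch of those calculations, following the pooled approach described above. The function name and the example counts are hypothetical; the inputs are the number of conversions and the number of users in each group.

```python
from math import sqrt
from statistics import NormalDist


def pooled_diff_ci(conv_control, n_control, conv_treatment, n_treatment, alpha=0.05):
    """Estimate and confidence interval for p_treatment - p_control."""
    p_control = conv_control / n_control
    p_treatment = conv_treatment / n_treatment
    # Pooled probability: the overall conversion rate if H0 (no difference) holds
    p_pool = (conv_control + conv_treatment) / (n_control + n_treatment)
    # Pooled standard error of the difference in proportions
    se_pool = sqrt(p_pool * (1 - p_pool) * (1 / n_control + 1 / n_treatment))
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    margin_of_error = z * se_pool
    d_hat = p_treatment - p_control
    return d_hat, d_hat - margin_of_error, d_hat + margin_of_error


d_hat, lower, upper = pooled_diff_ci(1000, 10_000, 1100, 10_000)
print(f"difference {d_hat:.4f}, 95% CI [{lower:.4f}, {upper:.4f}]")
```

If the interval contains zero, the null hypothesis cannot be rejected at the chosen confidence level.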

In this example the confidence interval for the difference in conversion rates is between -0.39% and 0.08%. Because the interval contains zero, we cannot reject the null hypothesis at the 95% confidence level. Even if it excluded zero, the change would only be practically significant if the interval lay entirely beyond the 1% practical significance level. Therefore we cannot conclude which landing page drives more conversions.
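The decision logic can be written down explicitly, which helps keep statistical and practical significance separate. This is a hypothetical helper, assuming the interval and the practical significance level are both expressed as absolute differences in conversion rate:

```python
def interpret_ci(lower, upper, d_min):
    """Classify a CI for p_treatment - p_control against zero and d_min."""
    if lower <= 0 <= upper:
        return "not statistically significant"
    if lower >= d_min or upper <= -d_min:
        # The whole interval is beyond the practical significance level
        return "statistically and practically significant"
    return "statistically significant but not clearly practically significant"


# The interval from this example (-0.39% to 0.08%) with a 1% practical significance level
print(interpret_ci(-0.0039, 0.0008, 0.01))
```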

4. Reducing random chance and managing small sample sizes

The above approach should provide a starting point for conducting A/B testing in Python; however, be mindful that as you run more experiments, including in parallel, a test at 95% confidence will reject a true null hypothesis about 5% of the time purely by random chance. Another issue arises when you are unable to gather sufficient data points, such as on a low-traffic website.
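To see the random-chance problem in action, here is a small simulation of A/A tests, where both groups share the same true conversion rate so every rejection is a false positive. The parameters (12% conversion, 5,000 users per group, 2,000 simulated tests) are arbitrary assumptions.

```python
from statistics import NormalDist

import numpy as np


def aa_false_positive_rate(p=0.12, n=5_000, trials=2_000, alpha=0.05, seed=0):
    """Share of simulated A/A tests (no real difference) that reject H0."""
    rng = np.random.default_rng(seed)
    conv_a = rng.binomial(n, p, size=trials)  # conversions in group A per trial
    conv_b = rng.binomial(n, p, size=trials)  # conversions in group B per trial
    p_pool = (conv_a + conv_b) / (2 * n)
    se_pool = np.sqrt(p_pool * (1 - p_pool) * (2 / n))
    z = (conv_b - conv_a) / n / se_pool
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return float(np.mean(np.abs(z) > z_crit))


rate = aa_false_positive_rate()
# Chance of at least one false positive across 20 independent tests at 95%
p_any_false_positive_20 = 1 - (1 - 0.05) ** 20
print(rate, round(p_any_false_positive_20, 3))
```

Around 5% of the simulated tests reject the null, and across 20 independent tests the chance of at least one false positive is roughly 64%.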

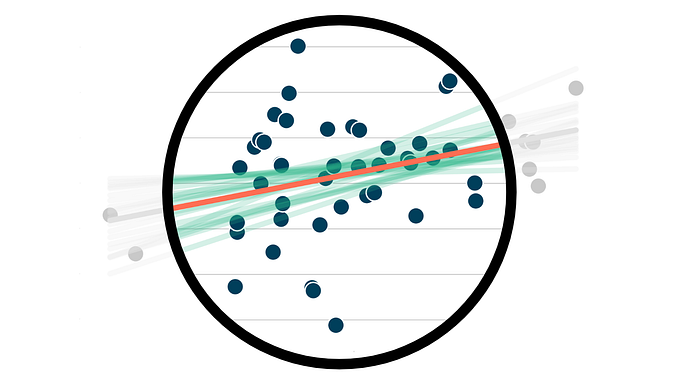

One solution to these issues is bootstrapping, a technique that samples the original dataset with replacement to create new datasets. It assumes that the original dataset is a fairly good reflection of the population as a whole, so sampling with replacement roughly simulates random sampling from the population.

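By bootstrapping the data, you can repeat the test on many resampled datasets and look at the distribution of results, rather than relying on a single test that may reject the null hypothesis by chance. It can also help when data are limited, although resampling cannot add information that the original sample does not contain, so a bootstrapped dataset larger than the original should not be treated as a genuinely larger sample.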
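A minimal sketch of bootstrapping the difference in conversion rates, assuming you have one 0/1 conversion outcome per user in each group. The function name and the example data are illustrative, and the percentile interval at the end is one common way to summarize a bootstrap distribution, not the only one.

```python
import numpy as np


def bootstrap_diff(control, treatment, n_boot=5_000, seed=0):
    """Bootstrap distribution of mean(treatment) - mean(control).

    Each resample draws users with replacement within each group,
    keeping the original group sizes.
    """
    rng = np.random.default_rng(seed)
    control = np.asarray(control)
    treatment = np.asarray(treatment)
    diffs = np.empty(n_boot)
    for i in range(n_boot):
        c = rng.choice(control, size=control.size, replace=True)
        t = rng.choice(treatment, size=treatment.size, replace=True)
        diffs[i] = t.mean() - c.mean()
    return diffs


# Illustrative data: 12% vs 13% conversion among 1,000 users per group
control = np.array([1] * 120 + [0] * 880)
treatment = np.array([1] * 130 + [0] * 870)
diffs = bootstrap_diff(control, treatment)
lower, upper = np.percentile(diffs, [2.5, 97.5])
print(f"95% percentile interval: [{lower:.4f}, {upper:.4f}]")
```

Here the interval spans zero, so even though treatment converted one percentage point better in the sample, 1,000 users per group is not enough to distinguish that from chance.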


If you’d like to expand your understanding of A/B testing further, see my blog: How to run better and more intuitive A/B tests using Bayesian statistics.